MediaPipe Audio Event Classification

During the Google Summer of Code, my primary project was to build a brand-new Cross-platform Solution using the MediaPipe Framework.

After much discussion with my mentor, we agreed to build an audio event classifier Solution using the MediaPipe Framework. This API can be used on any device, ranging from high-performance systems to mobile devices. Under the hood, it uses Google's YAMNet audio event classifier, which has been trained on audio events from the AudioSet ontology.

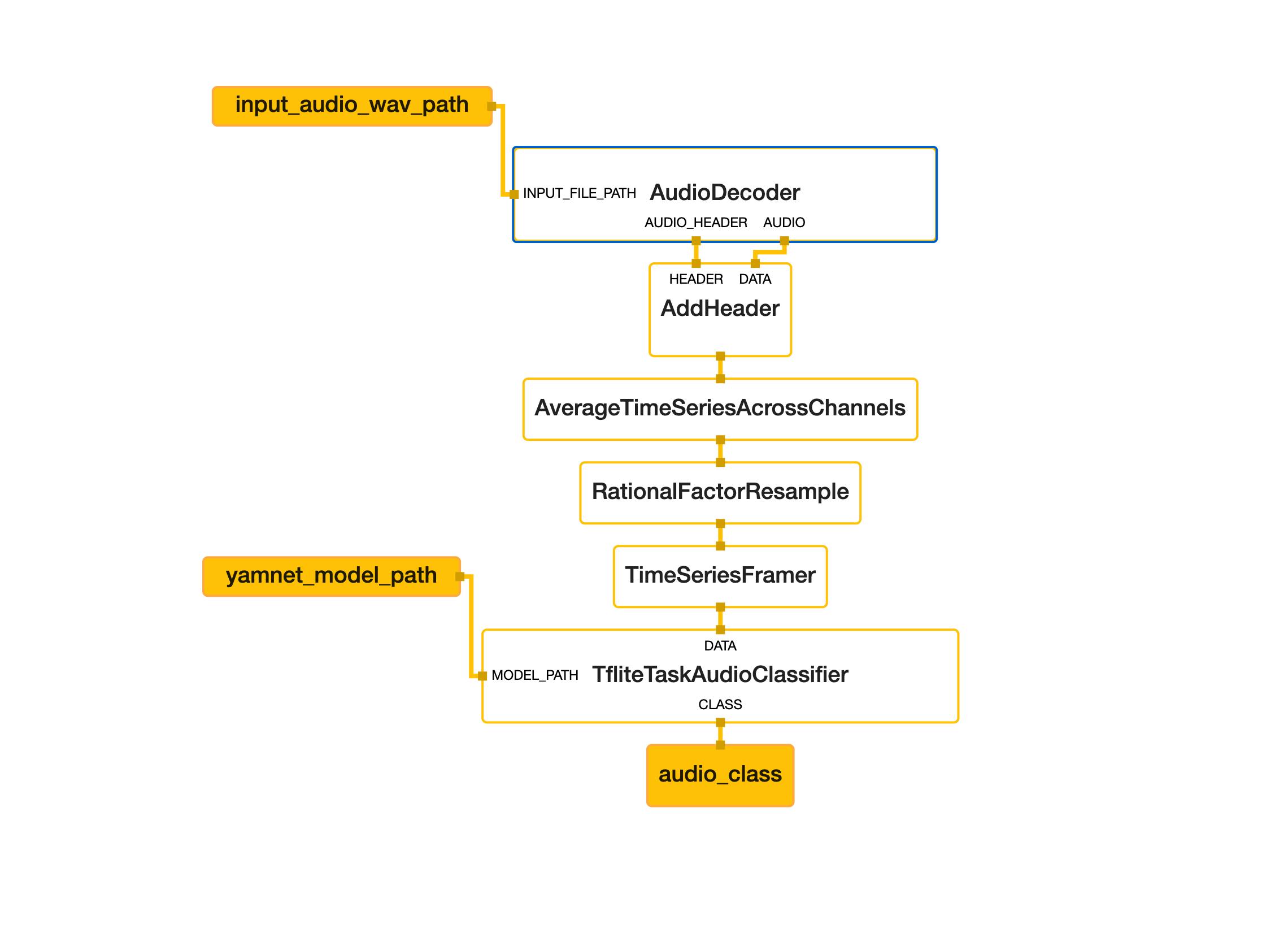

MediaPipe takes a graph-based approach: we define packet flow paths between nodes (also referred to as calculators), which produce and consume packets and perform the main computations.

The calculators used for the solution are:

- AddHeaderCalculator

- AverageTimeSeriesAcrossChannelsCalculator

- RationalFactorResampleCalculator

- TimeSeriesFramerCalculator

- TfliteTaskAudioClassifierCalculator
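To make the packet-flow idea concrete, here is a toy sketch in plain Python. It is not the MediaPipe API: `Packet`, `run_chain`, `double`, and `drop_odd_timestamps` are hypothetical names invented for illustration, and a real MediaPipe graph is declared in a `.pbtxt` config and can branch rather than run as a simple chain.

```python
from dataclasses import dataclass
from typing import Any, Callable, Iterable, List


@dataclass(frozen=True)
class Packet:
    """A timestamped piece of data flowing through the graph (toy version)."""
    timestamp: int
    payload: Any


# In this sketch a "calculator" is a function that consumes one packet
# and produces zero or more packets.
Calculator = Callable[[Packet], Iterable[Packet]]


def run_chain(calculators: List[Calculator], inputs: Iterable[Packet]) -> List[Packet]:
    """Push every input packet through the calculators in order."""
    stream = list(inputs)
    for calculator in calculators:
        stream = [out for packet in stream for out in calculator(packet)]
    return stream


def double(packet: Packet) -> Iterable[Packet]:
    """Example calculator: transform the payload, keep the timestamp."""
    yield Packet(packet.timestamp, packet.payload * 2)


def drop_odd_timestamps(packet: Packet) -> Iterable[Packet]:
    """Example calculator: a node may also emit nothing for a packet."""
    if packet.timestamp % 2 == 0:
        yield packet


if __name__ == "__main__":
    packets = [Packet(0, 1), Packet(1, 2), Packet(2, 3)]
    for result in run_chain([double, drop_odd_timestamps], packets):
        print(result)
```

The point of the sketch is only that each node sees packets, does its work, and hands packets on; the five calculators listed above play those roles in the actual graph.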

Concept

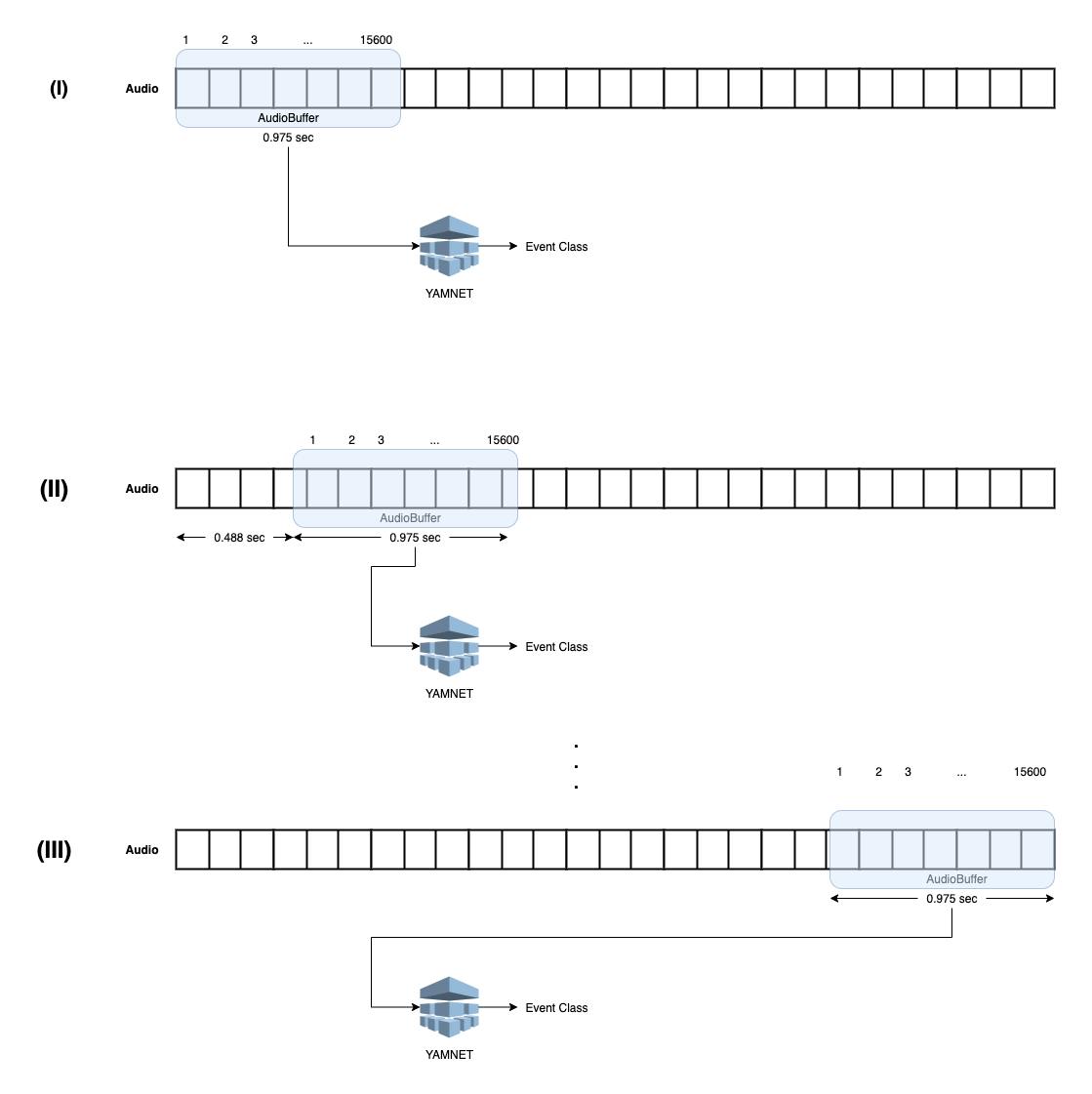

First, the audio file is decoded into a matrix, which is passed to AddHeaderCalculator, where audio headers are added. AverageTimeSeriesAcrossChannelsCalculator then converts the audio to mono, which the YAMNet audio classifier requires. RationalFactorResampleCalculator resamples the mono audio to 16 kHz, and TimeSeriesFramerCalculator divides it into buffers of 0.975 s with a hop time of 0.488 s. Each buffer is passed to TfliteTaskAudioClassifierCalculator, where the actual classification happens and the event class (such as Animal, Silence, Cat, Crackers, etc.) is returned.
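The arithmetic behind these preprocessing steps can be sketched in a few lines of plain Python. This is an illustration, not the calculators' actual code: the function names are hypothetical, the resampling step is reduced to computing the rational factor (the filtering itself is omitted), and dropping an incomplete final buffer is an assumption of this sketch.

```python
from fractions import Fraction

TARGET_RATE_HZ = 16_000   # sample rate the classifier expects
FRAME_SECONDS = 0.975     # buffer length from the description above
HOP_SECONDS = 0.488       # hop time from the description above


def to_mono(samples):
    """Average the channel values of each sample into a single value."""
    return [sum(channels) / len(channels) for channels in samples]


def resample_ratio(source_rate_hz, target_rate_hz=TARGET_RATE_HZ):
    """Return target/source as a reduced fraction (the rational factor)."""
    return Fraction(target_rate_hz, source_rate_hz)


def frame(signal, rate_hz=TARGET_RATE_HZ):
    """Split a mono signal into overlapping buffers.

    Assumption of this sketch: a trailing partial buffer is dropped.
    """
    frame_len = round(FRAME_SECONDS * rate_hz)  # 15600 samples at 16 kHz
    hop_len = round(HOP_SECONDS * rate_hz)      # 7808 samples at 16 kHz
    last_start = len(signal) - frame_len
    return [signal[start:start + frame_len]
            for start in range(0, last_start + 1, hop_len)]


if __name__ == "__main__":
    stereo = [(1.0, 3.0), (2.0, 4.0)]
    print(to_mono(stereo))                     # averaged channel values
    print(resample_ratio(44_100))              # factor for 44.1 kHz input
    two_seconds = [0.0] * (2 * TARGET_RATE_HZ)
    print(len(frame(two_seconds)))             # number of full buffers
```

Because the hop is roughly half the buffer length, consecutive buffers overlap by about 50%, so a short sound that straddles a buffer boundary still falls comfortably inside one of the buffers.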

Usage

# Clone the repository
git clone https://github.com/aniketiq/mediapipe.git

# Download the model
curl \
-L 'https://tfhub.dev/google/lite-model/yamnet/classification/tflite/1?lite-format=tflite' \
-o /tmp/yamnet.tflite

# Download the audio file
curl \
-L https://storage.googleapis.com/audioset/miaow_16k.wav \
-o /tmp/miao.wav

# Change into the repository
cd ./mediapipe

# Build the audio classifier
bazel build \
-c opt --define MEDIAPIPE_DISABLE_GPU=1 \
mediapipe/examples/desktop/\
audio_classification/audio_classification_cpu

# Run the audio classifier on the audio file
GLOG_logtostderr=1 \
bazel-bin/mediapipe/examples/desktop/\
audio_classification/audio_classification_cpu \
--calculator_graph_config_file=mediapipe/graphs/\
audio_classification/audio_classification_desktop_live.pbtxt \
--input_side_packets=yamnet_model_path=/tmp/yamnet.tflite,\
input_audio_wav_path=/tmp/miao.wav \
--output_stream_file=/tmp/class.txt && cat /tmp/class.txt